- Determine What is Important: What matters most to your organization likely differs from what matters to your peers at other organizations, even if there is some overlap. Monitoring everything isn’t realistic and may not even be valuable if nothing is done with the collected data. Zero in on the key metrics that define success and determine how best to monitor them.

- Break it Down into Manageable Pieces: Once you’ve determined what is important to the business, break it down into more manageable portions. For example, if blazing fast website performance is needed for an eCommerce site, consider dividing this into network, server, services, and application monitoring components.

- Maintain an Open System: There is nothing worse than being locked into an inflexible solution. Leveraging APIs that can tie disparate systems together is critical in today’s IT environments. Strive for a single source of truth for each of your components and exchange that information via vendor integrations or APIs to make the system better as a whole.

- Invest in Understanding the Reporting: Make the tools work for you; a dashboard alone is not enough. Most enterprise tools today offer robust reporting capabilities, but these often go unimplemented.

- Review, Revise, Repeat: Monitoring is rarely a “set and forget” item. It should be in a constant state of improvement, integration, and evaluation to enable better visibility into the environment and the ability to deliver on key business values.

The Art of Simplicity is a Puzzle of Complexity

Experiencing the Connected Mobile Experiences

Bluetooth World - Day One Recap

It’s WLPC Time Again

Designing Wireless Networks for Clinical Communications

Healthcare presents one of the most challenging wireless environments in today's networking world. The blend of critical network applications and the expectation of high-speed, ubiquitous wireless access for everyone is challenge enough, and then numerous devices are layered on top. Clinical communications are critical to providing a high quality of care and have become especially challenging to plan for. This post offers some guidance on designing these networks.

The Emergence of the Smartphone as a Clinical Communications Tool

Smartphones are joining the healthcare scene at increasing rates. Companies such as Voalte, Mobile Heartbeat, PatientSafe and Vocera are bringing new features and functionality to market and are transforming communications at the point of care. These devices are typically either Apple iPhones or the Motorola MC40, though plenty of other variations exist. Each of these phones behaves differently, and the differences range from when they roam to how they handle packet loss.

Access Point Transmit Power

When preparing to design a clinical communications network, know which endpoint devices it must support. In almost all cases, smartphones have lower transmit power than most admins are used to. As a result, we design these wireless networks with access point transmit power of 10-12 dBm rather than the 14-17 dBm at which many legacy networks were built. This reduction in access point transmit power drives up the number of access points required to cover a facility by 25-50%, depending on construction.
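To make the transmit power trade-off concrete, the sketch below converts dBm to milliwatts and gives a rough estimate of how AP count grows when power drops. It assumes a log-distance path-loss model with an exponent of 3.5, which is a hypothetical value for interior construction and not a figure from this post. The function names are illustrative, and the result is a sanity check rather than a substitute for a site survey.

```python
def dbm_to_mw(dbm: float) -> float:
    """Convert a power level in dBm to milliwatts."""
    return 10 ** (dbm / 10)


def ap_count_multiplier(old_dbm: float, new_dbm: float,
                        path_loss_exponent: float = 3.5) -> float:
    """Rough multiplier on AP count when transmit power changes.

    Assumes a log-distance path-loss model: cell radius scales with
    10 ** (delta_dB / (10 * n)) and AP count scales with 1 / cell area.
    The default exponent n = 3.5 is an assumption, not a measured value.
    """
    delta_db = new_dbm - old_dbm
    radius_ratio = 10 ** (delta_db / (10 * path_loss_exponent))
    return 1 / radius_ratio ** 2


if __name__ == "__main__":
    for dbm in (10, 12, 14, 17):
        print(f"{dbm} dBm = {dbm_to_mw(dbm):.1f} mW")
    print(f"14 -> 12 dBm: about {ap_count_multiplier(14, 12):.2f}x the APs")
```

Under these assumptions, a 2 dB reduction works out to roughly 30% more access points, which sits inside the 25-50% range above. Larger reductions or a different path-loss exponent will move the estimate.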

Data Rates

Disable lower data rates to reduce network overhead and functional cell size.
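One way to see the overhead: beacons are typically sent at the lowest basic rate, so a low minimum rate makes every beacon occupy more airtime. The sketch below is a simplified estimate. The 300-byte beacon size, the 102.4 ms beacon interval, and the fixed preamble durations (192 µs for long-preamble DSSS at 1 Mbps, 20 µs for OFDM) are assumptions, and it ignores OFDM symbol padding and contention.

```python
BEACON_INTERVAL_US = 102_400  # 100 time units of 1024 µs, a common default


def beacon_airtime_us(frame_bytes: int, rate_mbps: float,
                      preamble_us: float) -> float:
    """Approximate airtime of one beacon: preamble plus payload time."""
    return preamble_us + frame_bytes * 8 / rate_mbps


def beacon_overhead_pct(frame_bytes: int, rate_mbps: float,
                        preamble_us: float, ssid_count: int = 1) -> float:
    """Percent of airtime one AP spends on beacons across its SSIDs."""
    airtime = beacon_airtime_us(frame_bytes, rate_mbps, preamble_us)
    return 100 * airtime * ssid_count / BEACON_INTERVAL_US


if __name__ == "__main__":
    # Assumed values: 300-byte beacon, 4 SSIDs per AP.
    for rate, preamble in ((1, 192), (12, 20)):
        pct = beacon_overhead_pct(300, rate, preamble, ssid_count=4)
        print(f"{rate} Mbps basic rate: {pct:.2f}% of airtime per AP")
```

The per-AP figure also multiplies across every AP audible on the same channel, which is why raising the minimum rate helps most in dense deployments.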

Access Point Placement

Fast roaming is critical to the performance of Voice over WiFi, and for smartphones this typically means leveraging 802.11r and 802.11k. Understanding how these protocols work and how they affect roaming is essential to the success of any network designed to support clinical communications. As a wireless engineer tasked with this design, your goal is to create small, clearly delineated cells with enough overlap to facilitate the roaming behavior of these mobile devices. If the network is designed poorly, 802.11k can be a detriment to device roaming. Some general guidelines to follow:

- Access points should be mounted in patient rooms and out of hallways whenever possible

- Leverage interior service rooms to cover longer hallways--clean storage, food prep, case management offices, etc.

- If you must place an AP in a hallway:

  - consider planning to use short cross-unit hallways rather than the long hallways wherever possible

  - consider using alcoves to your advantage to reduce the spread of the RF signal

- Leverage known RF obstructions to help create clean roaming conditions that favor 802.11k

- Overlap may need to be as much as 20% due to roaming algorithms in the smartphones

- Pay attention to the location of patient bathrooms. Facilities where the bathroom is at the front of the patient room (near the hallway) present far more challenges than those where it is at the back of the room

- Stagger APs between floors such that they are not vertically stacked on each other
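The overlap guideline above can be spot-checked against survey data. The sketch below is a hypothetical helper, not a feature of any survey tool: it takes per-location RSSI readings keyed by AP name and reports the fraction of covered locations where at least two APs are usable. The -67 dBm threshold is an assumed value for voice and does not come from this post, so adjust it to the device vendor's guidance.

```python
from typing import Dict, List

ROAM_THRESHOLD_DBM = -67.0  # assumed minimum usable signal; not from the post


def overlap_fraction(survey_points: List[Dict[str, float]],
                     threshold_dbm: float = ROAM_THRESHOLD_DBM) -> float:
    """Fraction of covered survey points where two or more APs meet the threshold."""
    covered = 0
    overlapping = 0
    for readings in survey_points:
        usable = [rssi for rssi in readings.values() if rssi >= threshold_dbm]
        if usable:
            covered += 1
            if len(usable) >= 2:
                overlapping += 1
    return overlapping / covered if covered else 0.0


if __name__ == "__main__":
    # Made-up readings for illustration only (AP name -> RSSI in dBm).
    points = [
        {"ap-101": -55.0, "ap-102": -80.0},
        {"ap-101": -62.0, "ap-102": -66.0},
        {"ap-102": -58.0, "ap-103": -75.0},
        {"ap-103": -60.0},
        {"ap-103": -64.0, "ap-104": -79.0},
    ]
    print(f"Overlap: {overlap_fraction(points):.0%} of covered points")
```

This counts survey points rather than floor area, so it only approximates cell overlap, and the result depends on how evenly the points were sampled.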

Voice SSID

Configure the voice SSID for a single band whenever possible. You'll find that some vendors are still only comfortable with 2.4 GHz; from experience this can work, but it is not without issues. As a general rule, I recommend using AppRF to view the applications using the SSID and prioritize them properly. Smartphones are always talking via multiple apps on multiple ports, and this should be accounted for.

All Apps Are Not Created Equal

Certain mobile communications apps are simply not ready for the demands of a healthcare environment. Take the time to understand exactly how these apps are being used. On multiple occasions I've seen perceived "dropped" calls turn out to be an app issue rather than anything to do with the wireless network itself.

Test, Test, Test

One Company's Journey Out of Darkness, Part VI: Looking Forward

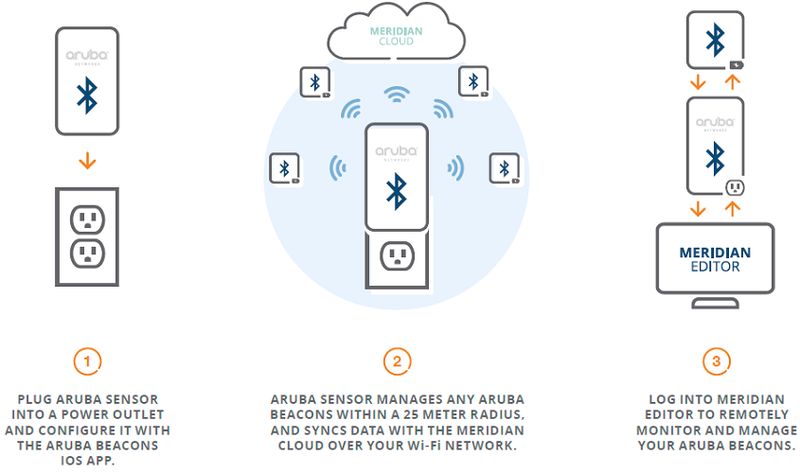

Aruba Networks Sensors Everything